Add a Node Group for Anjuna

In this section you will add a new Node Group to your EKS Cluster capable of running AWS Nitro Enclaves and Anjuna.

Launch Template

To add an Anjuna-capable Node Group to your EKS Cluster, you first need to define a new Launch Template.

Before you begin, consider the following:

- How much memory on each Node should be reserved for Enclaves?
- How many vCPUs on each Node should be reserved for Enclaves?
- What hugepage size is needed?
- Finally, what Instance Type and Size should be used? E.g. c5.2xlarge

For simplicity, assume that Applications are not large (less than 4GB in image size) and that you want to run at least 2 Enclaves on each Node. Then:

- 8GB of memory should be enough;
- 4 vCPUs are enough;
- a 2MiB hugepage size is sufficient;
- a c5.2xlarge Node is ideal.
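The sizing above can be sanity-checked with a little arithmetic. The sketch below is only an illustration: it assumes the published c5.2xlarge capacity of 8 vCPUs and 16 GiB of memory, and the variable names `huge_page_size_mb`, `instance_vcpus`, and `instance_mem_mb` are hypothetical helpers that appear nowhere else in this guide.

```shell
# Values chosen above.
nitro_reserved_mem_mb=8192   # 8GB reserved for Enclaves
nitro_reserved_cpu=4         # vCPUs reserved for Enclaves
huge_page_size_mb=2          # 2MiB hugepages (hypothetical helper variable)

# Assumed c5.2xlarge capacity: 8 vCPUs, 16 GiB of memory.
instance_vcpus=8
instance_mem_mb=16384

# Number of hugepages needed to back the reserved memory.
hugepages=$(( nitro_reserved_mem_mb / huge_page_size_mb ))

# Resources left for the host OS, the kubelet, and regular Pods.
host_vcpus=$(( instance_vcpus - nitro_reserved_cpu ))
host_mem_mb=$(( instance_mem_mb - nitro_reserved_mem_mb ))

# prints: hugepages=4096 host_vcpus=4 host_mem_mb=8192
echo "hugepages=${hugepages} host_vcpus=${host_vcpus} host_mem_mb=${host_mem_mb}"
```

Under these assumptions, half of the Node's vCPUs and memory remain available to the host and to Pods that do not run in Enclaves.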

Next, you will use these values to set up the Launch Template.

User Data

From the anjuna-tools folder, define environment variables for the settings above, plus some additional information needed in the next steps:

export nitro_reserved_mem_mb=8192

export nitro_reserved_cpu=4

export nitro_huge_page_size=2Mi

export nitro_instance_type=c5.2xlarge

export nitro_allocator_gz_b64=$(wget https://raw.githubusercontent.com/aws/aws-nitro-enclaves-cli/v1.2.0/bootstrap/nitro-enclaves-allocator -O- | gzip | base64 -w0)

export nitro_allocator_service_b64=$(wget https://raw.githubusercontent.com/aws/aws-nitro-enclaves-cli/v1.2.0/bootstrap/nitro-enclaves-allocator.service -O- | sed "s|usr/bin|usr/local/sbin|" | base64 -w0)

export vars='$nitro_reserved_cpu $nitro_reserved_mem_mb $nitro_huge_page_size $nitro_allocator_gz_b64 $nitro_allocator_service_b64'

export user_data=$(envsubst "$vars" < terraform/enclave-node-userdata.sh.tpl | sed 's/\$\$/\$/')

export CLUSTER_ARN=$(kubectl config view --minify -o jsonpath='{.contexts[0].context.cluster}')

export CLUSTER_NAME=$(echo $CLUSTER_ARN | awk -F/ '{print $NF}')

export CLUSTER_REGION=$(echo $CLUSTER_ARN | awk -F: '{print $4}')

Then, run the commands below to prepare the Launch Template’s User Data in a MIME multi-part file:

Note: For instructions to set up pre-existing clusters with RedHat Enterprise Linux, please contact support@anjuna.io.

Amazon Linux 2:

cat <<EOF > user_data.mime
MIME-Version: 1.0
Content-Type: multipart/mixed; boundary="==MYBOUNDARY=="

--==MYBOUNDARY==
Content-Type: text/x-shellscript; charset="us-ascii"

${user_data}
--==MYBOUNDARY==--
EOF

Amazon Linux 2023:

cat <<EOF > user_data.mime
MIME-Version: 1.0
Content-Type: multipart/mixed; boundary="==MYBOUNDARY=="

--==MYBOUNDARY==
Content-Type: text/x-shellscript; charset="us-ascii"

${user_data}
--==MYBOUNDARY==
Content-Type: text/yaml; charset="us-ascii"

apiVersion: node.eks.aws/v1alpha1
kind: NodeConfig
spec:
  cluster:
    name: ${CLUSTER_NAME}
    apiServerEndpoint: $(aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.endpoint" --output text --region $CLUSTER_REGION)
    certificateAuthority: $(aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.certificateAuthority.data" --output text --region $CLUSTER_REGION)
    cidr: $(aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.kubernetesNetworkConfig.serviceIpv4Cidr" --output text --region $CLUSTER_REGION)
    ipFamily: $(aws eks describe-cluster --name $CLUSTER_NAME --query "cluster.kubernetesNetworkConfig.ipFamily" --output text --region $CLUSTER_REGION)
--==MYBOUNDARY==--
EOF

Amazon Linux 2023 introduced the |
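Because a malformed multi-part file is easy to produce and hard to debug once a Node has booted, it may help to check the generated file before using it. The helper below is a minimal sketch, not part of the Anjuna tooling: the function name `check_user_data_mime` is hypothetical, and it only verifies the overall MIME framing, not the contents of each part.

```shell
# Hypothetical helper: returns 0 when the file has the expected MIME framing.
check_user_data_mime() {
  file="$1"

  # The document must start with the MIME version header.
  if [ "$(head -n 1 "$file")" != "MIME-Version: 1.0" ]; then
    echo "missing MIME-Version header" >&2
    return 1
  fi

  # At least one part must be opened with the boundary marker.
  if ! grep -q -x -e '--==MYBOUNDARY==' "$file"; then
    echo "no part boundary found" >&2
    return 1
  fi

  # Exactly one closing boundary is expected...
  closing=$(grep -c -x -e '--==MYBOUNDARY==--' "$file" || true)
  if [ "$closing" -ne 1 ]; then
    echo "expected exactly one closing boundary, found ${closing}" >&2
    return 1
  fi

  # ...and it must be the last non-empty line of the file.
  last=$(grep -v '^[[:space:]]*$' "$file" | tail -n 1)
  if [ "$last" != "--==MYBOUNDARY==--" ]; then
    echo "closing boundary is not the last line" >&2
    return 1
  fi
}
```

With the function defined in your shell, running `check_user_data_mime user_data.mime && echo OK` should print OK for a file generated by either set of commands above.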

Launch Template Config

Run the command below to prepare the Launch Template Config JSON file:

cat <<EOF > launch-template-config.json
{
  "InstanceType": "c5.2xlarge",
  "EnclaveOptions": {"Enabled": true},
  "UserData": "$(cat user_data.mime | base64 -w0)",
  "MetadataOptions": {
    "HttpEndpoint": "enabled",
    "HttpPutResponseHopLimit": 2,
    "HttpTokens": "optional"
  }
}
EOF

Create The Launch Template

Run the command below to create the Launch Template. Make sure to create it in the same region as your EKS cluster.

Amazon Linux 2:

aws ec2 create-launch-template \
  --launch-template-name anjuna-eks-al2 \
  --launch-template-data file://launch-template-config.json \
  --region $CLUSTER_REGION

Amazon Linux 2023:

aws ec2 create-launch-template \
  --launch-template-name anjuna-eks-al2023 \
  --launch-template-data file://launch-template-config.json \
  --region $CLUSTER_REGION

Node Group

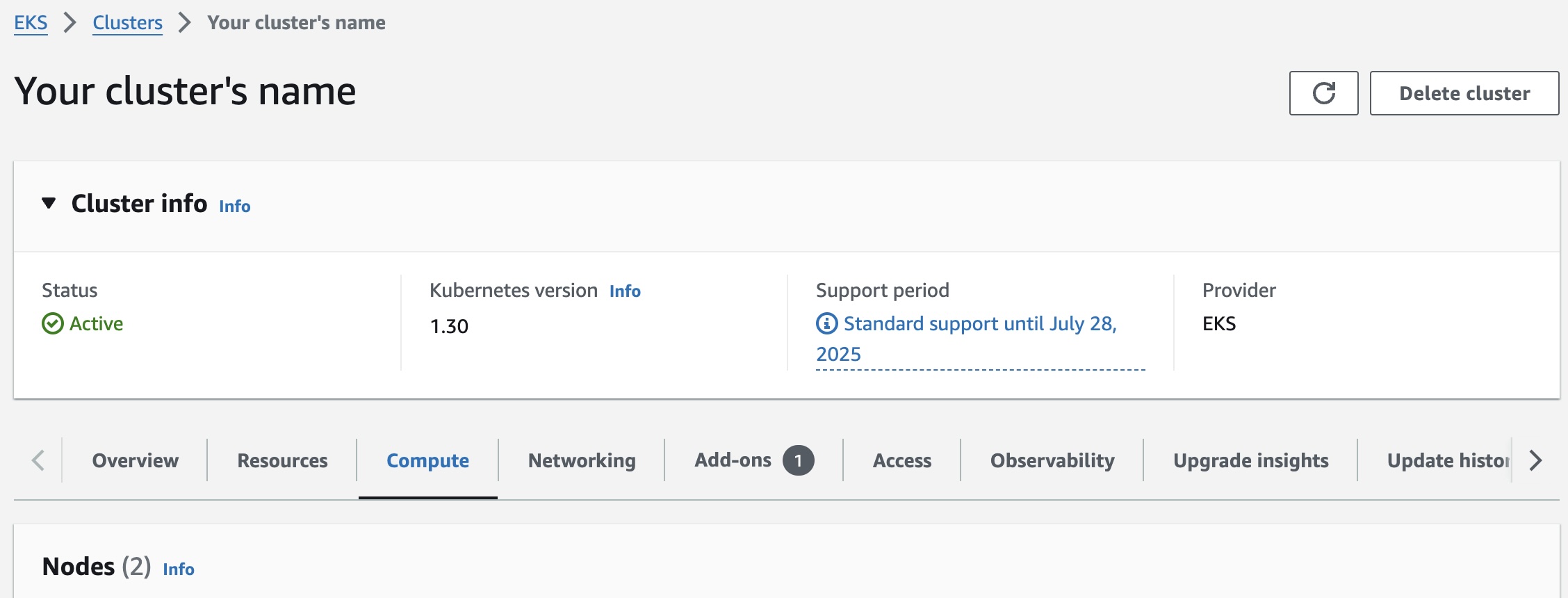

With a Launch Template that supports both AWS Nitro and Anjuna, you should now be able to configure a new Node Group via the AWS Web Console:

-

Access your EKS cluster page. E.g.:

-

On the

Computetab, find theNode Groupspanel and click onAdd node groupbutton; -

On the

Node group configurationpanel:-

Assign a unique

Nameto the node group; -

Select the IAM role that will be used by the Nodes;

-

-

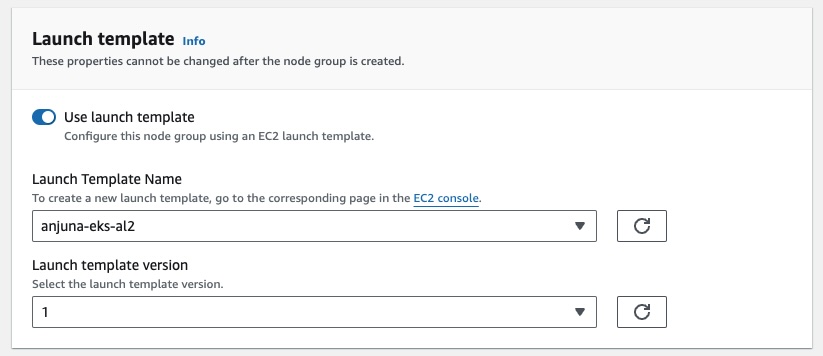

On the

Launch templatepanel, select the option to use a launch template and then select the Anjuna launch template you created above. E.g.:

-

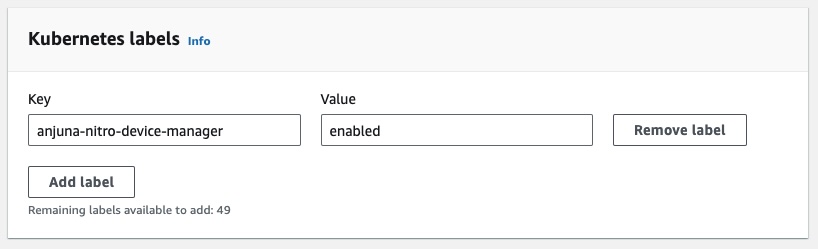

On the

Kubernetes labelspanel:-

Click on the

Add labelbutton; -

Configure the new label

Keywithanjuna-nitro-device-manager; -

Configure the new label

Valuewithenabled;

The

anjuna-nitro-device-managerlabel determines which nodes have been properly configured to have the Anjuna Toolset installed.

-

-

Move to the next page;

-

Select

AL2orAL2023as the AMI type, depending on which launch template you created; -

Define the scaling and update configuration that best fits your needs and move to the next page;

-

Select the subnets where to best place the Node Group and move to the next page;

-

Review the Node Group configuration and click on the

Createbutton;

Note that, due to how the Launch Template is configured, no EC2 Key Pair will be assigned to the Node. If you wish to configure a Key Pair, edit the launch-template-config.json file and add a new JSON field called KeyName with the name of the Key Pair that you want to use.
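As an illustration of the KeyName field, the sketch below prints the same Launch Template Config with a Key Pair added. The function name `launch_template_config_with_key` and the key name `my-key-pair` used in the usage note are hypothetical placeholders. The sketch assumes that user_data.mime exists in the current directory and that the named Key Pair already exists in the cluster's region.

```shell
# Hypothetical helper: prints a Launch Template Config that also sets KeyName.
# Assumes user_data.mime is present in the current directory.
launch_template_config_with_key() {
  key_name="$1"   # name of an existing EC2 Key Pair (placeholder)
  cat <<EOF
{
  "InstanceType": "c5.2xlarge",
  "KeyName": "${key_name}",
  "EnclaveOptions": {"Enabled": true},
  "UserData": "$(base64 -w0 < user_data.mime)",
  "MetadataOptions": {
    "HttpEndpoint": "enabled",
    "HttpPutResponseHopLimit": 2,
    "HttpTokens": "optional"
  }
}
EOF
}
```

For example, `launch_template_config_with_key my-key-pair > launch-template-config.json` would regenerate the config file with the extra field. The Launch Template must then be created from the updated file for the Key Pair to take effect.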

Now, wait for the Node Group to be up and running.

Once the Node Group is up and running, you should be able to use kubectl to check that it has been correctly configured:

$ kubectl describe nodes -l anjuna-nitro-device-manager=enabled | grep -m1 "hugepages-2Mi:"
hugepages-2Mi: 8Gi

The output hugepages-2Mi: 8Gi confirms that the Nodes labelled with anjuna-nitro-device-manager=enabled have been correctly configured with 8Gi of 2Mi hugepages, as determined above.
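If you want to script this check, for instance in a provisioning pipeline, the comparison can be wrapped in a small function. This is a minimal sketch under the assumption that the describe output has the shape shown above; the function name `hugepages_match` is hypothetical.

```shell
# Hypothetical helper: reads `kubectl describe nodes` output on stdin and
# succeeds when the first hugepages-2Mi line reports the expected quantity.
hugepages_match() {
  expected="$1"
  # Keep the first matching line and extract its value column.
  actual=$(grep -m1 'hugepages-2Mi:' | awk '{print $2}')
  [ "$actual" = "$expected" ]
}
```

A possible invocation is `kubectl describe nodes -l anjuna-nitro-device-manager=enabled | hugepages_match 8Gi && echo "hugepages OK"`. Like the command above, it inspects only the first matching line, so it does not verify every Node in the group.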